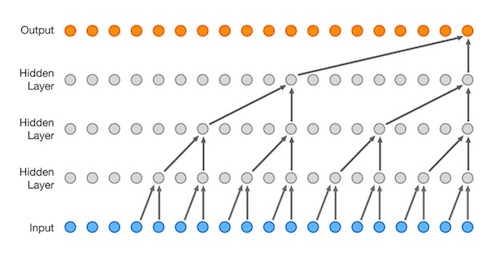

Using neural networks to create new music

Monday, June 5, 2017 at 18:01 tagged deepmind, generative, network, neural, neuralnetwork, wavenet

Friday, August 28, 2015 at 12:24 tagged ceramic, chance, france, generative, water

Tuesday, November 26, 2013 at 11:17 tagged apps, driving, generative, iPhone, music

There’s some music I associate with traveling by car. I don’t own a car and travel by public transport most of the time, so the association is mostly based on memories of sitting in the back of my parents’ car, listening to Phil Collins, Crowded House and the like. But I do ‘get’ what people call “driving music”. Some music is just better suited to driving.

Volkswagen played on this concept, taking driving music further. Collaborating with dance music artists Underworld and audio specialist Nick Ryan, perhaps best known for his 3D audio game Papa Sangre, they created an app that reads several data streams from a smartphone and uses them to generate the music. So when you’re slowly driving along a country road on a rainy Thursday morning, the music’s going to sound a whole lot different than if you’re speeding down the motorway on your way home that night.

I think it’s good to see technologies like this, which have been around in more open-source efforts like MobMuPlat, being used by R&D departments of bigger companies to bring new experiences like these to a broader audience. The app isn’t commercially available yet, but they are inviting people to “play the road” themselves.